For the past year, we’ve been told that artificial intelligence is revolutionising productivity, helping us write emails, generate code, and summarise documents. But what if the reality of how people really use AI differs markedly from the promotional narrative?

A data-driven study by OpenRouter has pulled back the curtain on real-world AI usage, analysing over 100 trillion tokens – representing billions of individual exchanges with large language models such as ChatGPT, Claude, and many others. The report’s data challenge common assumptions about how this technology is actually deployed and by whom.

OpenRouter is a multi-model AI inference platform that routes requests across more than 300 models from over 60 providers, including OpenAI and Anthropic, as well as open-source alternatives like DeepSeek and Meta’s LLaMA. The platform therefore provides a broad cross-section of model behaviour, system performance and usage patterns at scale.

With over 50% of usage originating outside the United States and millions of developers and users worldwide, OpenRouter’s dataset offers a rare view into how AI is used across geographies, use cases and company types. Importantly, the study analysed metadata from billions of interactions rather than the text of conversations, preserving user privacy while revealing trends and behavioural patterns.

Open-source AI models accounted for approximately one-third of total usage by late 2025, with notable spikes following major releases.

The roleplay revolution nobody saw coming

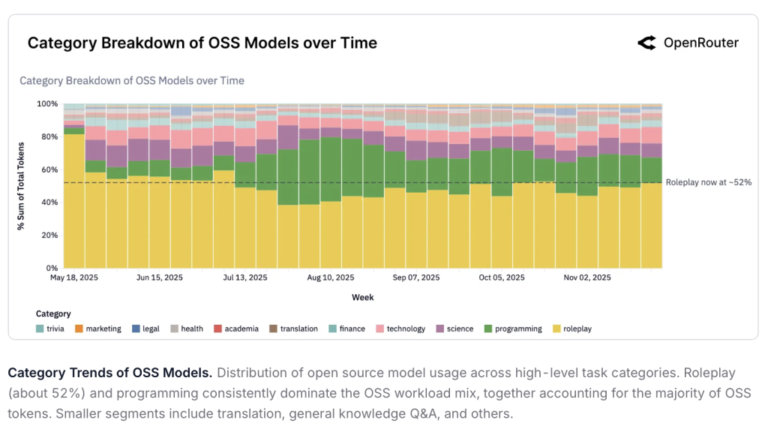

Perhaps the most surprising discovery in the OpenRouter dataset is that more than half of open-source AI model usage serves roleplay and creative storytelling, rather than conventional productivity tasks.

Put simply, while companies and researchers talk about automating work and information workflows, many people spend their time using models for character-driven conversation, interactive fiction and game-like scenarios. These are not throwaway chats – they are structured interactions that resemble small, persistent systems for narrative play and social simulation.

Over 50% of open-source model interactions fall into this category, a share that substantially exceeds the share of programming assistance within the same model set. (OpenRouter categorised “roleplay” using prompt keywords, conversational structure and tagging; see the report for complete methodology.)

“This counters an assumption that LLMs are mostly used for writing code, emails, or summaries,” the report states. “In reality, many users engage with these models for companionship or exploration.”

These sessions are often highly specific. About 60% of roleplay tokens belong to defined gaming scenarios and creative writing contexts – for example, a user might run a persistent fantasy campaign in which the model tracks state, NPCs, and plot threads across many messages. That kind of use looks less like casual conversation and more like a purpose-built tool for storytelling and play.

For product teams and developers: consider ways to support structured roleplay as a first-class use case – for instance, tooling to persist state, moderate content and export sessions for further editing or publication.
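As a rough illustration of what persisting state and exporting sessions could look like, here is a minimal Python sketch. The `CampaignState` class, its fields and the `save`/`load` helpers are hypothetical names chosen for this example; they are not taken from the OpenRouter report or from any particular product.

```python
import json
import tempfile
from dataclasses import asdict, dataclass, field
from pathlib import Path


@dataclass
class CampaignState:
    """Hypothetical container for the state a roleplay session accumulates."""
    title: str
    npcs: dict = field(default_factory=dict)          # NPC name -> short description
    plot_threads: list = field(default_factory=list)  # open storylines
    turn: int = 0                                     # number of turns played so far


def save(state: CampaignState, path: Path) -> None:
    """Export the session as JSON so it can be resumed, edited or published."""
    path.write_text(json.dumps(asdict(state), indent=2), encoding="utf-8")


def load(path: Path) -> CampaignState:
    """Restore a session that was previously written by save()."""
    return CampaignState(**json.loads(path.read_text(encoding="utf-8")))


# Made-up example data, round-tripped through a temporary file.
state = CampaignState(
    title="The Sunken Archive",
    npcs={"Mira": "archivist who distrusts the party"},
    plot_threads=["find the missing ledger"],
    turn=12,
)
with tempfile.TemporaryDirectory() as tmp:
    session_file = Path(tmp) / "campaign.json"
    save(state, session_file)
    restored = load(session_file)

print(restored == state)
```

Plain JSON keeps the exported session readable and editable by hand, which suits the "export for further editing or publication" case. A production system would likely also need schema versioning and content moderation hooks, which this sketch leaves out.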

Programming’s meteoric rise

Although roleplay dominates much open-source usage, programming emerged as the fastest-growing category across the entire model ecosystem during 2025. At the start of the year, coding-related queries represented roughly 11% of total AI usage; by year‑end, that proportion had surged past 50%.

The shift reflects AI’s deeper integration into developer workflows and product engineering. Average prompt lengths for programming tasks increased about fourfold – from roughly 1,500 tokens to more than 6,000 – and the dataset includes individual requests exceeding 20,000 tokens. In practice, developers are no longer asking for single snippets: they are submitting multi-file projects, requesting architecture reviews, running extended debugging sessions and asking models to reason across entire modules of code.

These programming interactions are among the longest and most complex seen across systems. They typically involve multi-step tasks (for example, reproducing a failing test, locating the performance bottleneck, and proposing and implementing a fix) and often require the model to call external tools or reference persistent state across messages.

Anthropic’s Claude models captured the largest share of programming-related usage for much of 2025 – more than 60% in OpenRouter’s measurements – though competition from Google, OpenAI and several open-source models intensified through the year.


The Chinese AI surge

One of the most pronounced geographic shifts in the OpenRouter data is the rapid rise of Chinese models: by late 2025, they accounted for roughly 30% of global usage, up from about 13% at the start of the year – more than doubling their share within a single calendar year.

Providers such as DeepSeek, Qwen (from Alibaba) and Moonshot AI have attracted substantial traffic. DeepSeek alone processed 14.37 trillion tokens during the study period, underscoring how quickly regional models can scale when they match local language needs and product fit. This change marks a material rebalancing of the global model landscape, where Western incumbents are no longer the only dominant players.

Language and spending patterns shifted in step with provider share. Simplified Chinese is now the second-most common language for global interactions (about 5% of total usage), after English (about 83%). Asia’s share of AI spending more than doubled – from approximately 13% to 31% – and Singapore emerged as the second-largest country by usage after the United States. (OpenRouter identifies language by prompt and metadata; see the report for methodology.)

The rise of “Agentic” AI

The study highlights a defining shift in how models are used: agentic inference. In plain terms, agentic models do more than answer isolated questions – they plan, execute multi-step tasks, call external tools and sustain reasoning across extended sessions.

OpenRouter measured a sharp rise in interactions it classifies as “reasoning‑optimised,” from almost none in early 2025 to more than 50% by year‑end. That indicates a move away from simple text generation towards systems that behave like autonomous assistants, capable of chaining steps and maintaining state.

“The median LLM request is no longer a simple question or isolated instruction,” the researchers explain. “Instead, it is part of a structured, agent-like loop, invoking external tools, reasoning over state, and persisting across longer contexts.”

Agentic capability can be summarised in three practical ways:

- Planning and decomposition – breaking a high‑level problem into ordered subtasks;

- Tool invocation – calling code runners, databases, search or other APIs as part of the workflow;

- Stateful reasoning – remembering context across messages and iterating on results.
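The three capabilities listed above can be sketched as a toy loop in Python. This is an illustrative assumption about the general shape of an agentic workflow, not a description of how any specific model or OpenRouter integration works: `plan`, `TOOLS`, `AgentState` and `run` are hypothetical names, and the planner and tools are hard-coded stand-ins where a real system would call a model and real APIs.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Tuple


@dataclass
class AgentState:
    """State carried across steps: the goal plus every (tool, result) so far."""
    goal: str
    history: List[Tuple[str, str]] = field(default_factory=list)


def plan(goal: str) -> List[Tuple[str, str]]:
    """Planning and decomposition. Stand-in: a real agent would ask a model
    to break the goal into ordered subtasks."""
    return [("search", goal), ("summarise", "search")]


def search(arg: str, state: AgentState) -> str:
    """Stand-in for an external search tool."""
    return f"3 documents found for '{arg}'"


def summarise(arg: str, state: AgentState) -> str:
    """Stateful reasoning: reads the result of an earlier step from history."""
    earlier = next(result for tool, result in state.history if tool == arg)
    return "summary of: " + earlier


# Tool invocation: tools are looked up by name and called by the loop.
TOOLS: Dict[str, Callable[[str, AgentState], str]] = {
    "search": search,
    "summarise": summarise,
}


def run(goal: str) -> AgentState:
    state = AgentState(goal)
    for tool_name, arg in plan(goal):
        result = TOOLS[tool_name](arg, state)
        state.history.append((tool_name, result))
    return state


final_state = run("token pricing")
for tool_name, result in final_state.history:
    print(tool_name, "->", result)
```

The second step only works because the first step's output was kept in `history`, which is the property that separates an agent-like loop from a sequence of unrelated single-turn requests.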

Examples from the dataset (anonymised): a developer asking a model to reproduce a failing test across multiple files, identify the performance bottleneck and propose code changes; a product team asking a model to evaluate user feedback, generate a prioritised roadmap and create a draft specification; a marketing user running a multi‑step campaign generator that assembles assets, schedules posts and summarises expected KPIs. These illustrate how agentic use cases span code, business processes and creative work.

There are real implications for security and systems design when models call external tools: access controls, audit logs and sandboxing become essential parts of any production system that uses agentic models. For engineering teams, best practices include least‑privilege tool access, clear provenance for automated actions, and human‑in‑the‑loop checkpoints for high‑risk tasks.
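To make those practices concrete, the following Python sketch shows one possible way to combine an allowlist (least privilege), an audit log (provenance) and an approval callback (human in the loop). `ToolGateway` and everything inside it are hypothetical names invented for this example, and the tools are harmless stand-ins. Sandboxing is not shown.

```python
from typing import Callable, Dict, List, Set


class ToolGateway:
    """Hypothetical wrapper that mediates every tool call an agent makes."""

    def __init__(
        self,
        tools: Dict[str, Callable[[str], str]],
        allowed: Set[str],
        needs_approval: Set[str],
        approve: Callable[[str, str], bool],
    ) -> None:
        self.tools = tools
        self.allowed = allowed                # least privilege: explicit allowlist
        self.needs_approval = needs_approval  # high-risk tools gated by a reviewer
        self.approve = approve                # human-in-the-loop callback
        self.audit_log: List[dict] = []       # record of every attempted action

    def _record(self, tool: str, arg: str, outcome: str) -> None:
        self.audit_log.append({"tool": tool, "arg": arg, "outcome": outcome})

    def call(self, tool: str, arg: str) -> str:
        if tool not in self.allowed or tool not in self.tools:
            self._record(tool, arg, "denied")
            raise PermissionError(f"tool '{tool}' is not permitted for this agent")
        if tool in self.needs_approval and not self.approve(tool, arg):
            self._record(tool, arg, "rejected_by_reviewer")
            raise PermissionError(f"reviewer rejected call to '{tool}'")
        result = self.tools[tool](arg)
        self._record(tool, arg, "ok")
        return result


gateway = ToolGateway(
    tools={
        "read_file": lambda path: f"contents of {path}",  # stand-in, reads nothing
        "delete_file": lambda path: f"deleted {path}",    # stand-in, deletes nothing
    },
    allowed={"read_file", "delete_file"},
    needs_approval={"delete_file"},
    approve=lambda tool, arg: False,  # stand-in reviewer that rejects everything
)

print(gateway.call("read_file", "notes.txt"))
try:
    gateway.call("delete_file", "notes.txt")
except PermissionError as err:
    print("blocked:", err)
print(gateway.audit_log)
```

Denied and rejected attempts are logged as well as successful ones, since blocked actions are often the most useful entries when reviewing what an automated system tried to do.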

For further reading, consult engineering guidance on building safe agentic systems and OpenRouter’s methodology notes on how tool calls and reasoning‑optimised interactions were identified in the dataset.

The “Glass Slipper Effect”

One of the study’s most intriguing findings concerns user retention. Researchers describe a Cinderella-like “Glass Slipper” effect: when a model is first to solve a specific, high‑value problem, it creates disproportionately strong and lasting loyalty among early users.

In other words, being first to market is useful, but being first to solve a concrete pain point truly matters more. The report illustrates this with the June 2025 cohort of Google’s Gemini 2.5 Pro, which retained roughly 40% of its users by month five – a markedly higher retention rate than later cohorts (the study measures active user retention by cohort; see the report for exact definitions).
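For readers unfamiliar with cohort retention, the sketch below shows one common way to compute it in Python. It assumes a cohort is defined by a user's first active month and that retention is the share of that cohort active in a later month; the report's exact definition may differ. The function name and the activity data are made up for illustration.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def retention_by_month(
    activity: Iterable[Tuple[str, int]], cohort_month: int
) -> Dict[int, float]:
    """Return {months_since_cohort_start: share of the cohort active that month}.

    activity holds (user_id, month_index) pairs. A user belongs to the cohort
    if the first month in which they were active equals cohort_month.
    """
    first_seen: Dict[str, int] = {}
    active = defaultdict(set)
    for user, month in activity:
        first_seen[user] = min(month, first_seen.get(user, month))
        active[month].add(user)

    cohort = {user for user, month in first_seen.items() if month == cohort_month}
    if not cohort:
        return {}
    last_month = max(active)
    return {
        month - cohort_month: len(active[month] & cohort) / len(cohort)
        for month in range(cohort_month, last_month + 1)
    }


# Made-up activity: five users start in month 0 and two are still active in
# month 5. User "f" joins later, so belongs to a different cohort.
events = [
    ("a", 0), ("b", 0), ("c", 0), ("d", 0), ("e", 0),
    ("f", 3),
    ("a", 5), ("b", 5),
]
retention = retention_by_month(events, cohort_month=0)
print(f"month-5 retention: {retention[5]:.0%}")  # prints "month-5 retention: 40%"
```

In this toy data, two of the five founding users remain at month five, which gives the same 40% figure the report quotes for its example cohort.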

This pattern challenges simple assumptions about AI competition. Models that meet critical workflow needs become embedded in people’s daily systems and processes, increasing the technical and behavioural costs of switching to alternatives. Product and retention teams should therefore prioritise shipping solutions that demonstrably solve a high‑value problem rather than focusing solely on feature parity.

Cost doesn’t matter (as much as you’d think)

Counterintuitively, OpenRouter’s analysis suggests that AI usage is relatively price‑inelastic: a 10% price reduction corresponds to only a 0.5–0.7% increase in usage. In short, minor cuts to token costs do not automatically drive outsized demand.
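The arithmetic behind "price-inelastic" can be worked through in a few lines of Python. This uses the simple textbook ratio of percentage changes applied to the figures quoted above; it is an assumption that this matches the report's intent, and the report may have estimated elasticity differently (for example, with a regression). The function name is hypothetical.

```python
def implied_elasticity(pct_change_usage: float, pct_change_price: float) -> float:
    """Price elasticity of demand: % change in usage divided by % change in price."""
    if pct_change_price == 0:
        raise ValueError("price change must be non-zero")
    return pct_change_usage / pct_change_price


# Figures quoted from the report: a 10% price cut, a 0.5% to 0.7% rise in usage.
low = implied_elasticity(0.5, -10.0)
high = implied_elasticity(0.7, -10.0)
print(f"implied elasticity: {low:.2f} to {high:.2f}")

# Demand is conventionally called inelastic when the magnitude is below 1.
print("inelastic:", abs(low) < 1 and abs(high) < 1)
```

An elasticity of roughly -0.05 to -0.07 is far below 1 in magnitude, which is why a price cut on its own does little to increase usage.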

Both premium and budget offerings coexist at scale. Premium models from Anthropic and OpenAI command price bands of roughly $2 to $35 per million tokens, while maintaining strong customer adoption among those who prioritise reasoning quality and reliability. At the same time, budget alternatives such as DeepSeek and Google’s Gemini Flash scale at under $0.40 per million tokens and serve cost‑sensitive workloads.

“The LLM market does not seem to behave like a commodity just yet,” the report concludes. “Users balance cost with reasoning quality, reliability, and breadth of capability.” That implies buyers assess trade-offs: enterprise systems and critical workflows often prefer higher‑quality, more reliable models despite higher per‑token costs, while bulk-generation tasks or low‑risk experiments tend to favour cheaper models.

For procurement and engineering teams, the practical takeaway is to evaluate models by total cost of ownership – not token price alone. Consider factors such as tool integration, uptime, reasoning accuracy, and ease of embedding into existing systems, and use experiment‑driven selection rather than assuming cheaper equals better for scale.

What this means going forward

The OpenRouter study sketches a far more nuanced picture of how people use AI than standard industry narratives imply. Yes, AI is reshaping programming and professional work, but it is also spawning new categories of human‑computer interaction – notably roleplay, creative generation and extended conversational systems.

Geography and language matter: the market is diversifying globally, with Chinese models and Asian spending surging, and Simplified Chinese emerging as a significant language of interaction alongside English. Technically, the ecosystem is shifting from single‑turn text generation to agentic, multi‑step reasoning where models act as persistent assistants across tasks and sessions.

Crucially, user behaviour and loyalty do not always follow expectations. The study notes that “ways in which people use LLMs do not always align with expectations and vary significantly country by country, state by state, use case by use case.” That variability matters for companies building systems and products: what works in one market or for one use case may not translate elsewhere.

Practical takeaways for product teams, developers and researchers:

- Measure real usage, not assumptions – instrument products to capture how users actually interact with models and what features they embed into workflows.

- Design for agentic workflows – support state persistence, safe tool integrations and audit trails so models can perform multi‑step tasks reliably.

- Prioritise solving high‑value problems – being “first to solve” a real need drives durable retention more than being first to market with incremental features.

Understanding these patterns – across models, use cases and countries – will be essential as AI becomes further embedded in daily life. The gap between how we expect AI to be used and how it is actually used remains substantial; this report is a helpful step toward closing it. For further reading, download the full OpenRouter report and its methodology notes to inspect the data and examples behind these findings.

Senior Reporter/Editor

Bio: Ugochukwu is a freelance journalist and Editor at AIbase.ng, with a strong professional focus on investigative reporting. He holds a degree in Mass Communication and brings extensive experience in news gathering, reporting, and editorial writing. With over a decade of active engagement across diverse news sources, he contributes in-depth analytical, practical, and expository articles that explore artificial intelligence and its real-world impact. His seasoned newsroom experience and well-established information networks provide AIbase.ng with credible, timely, and high-quality coverage of emerging AI developments.